The impact of A/B testing is created along 2 dimensions: the potential impact of any single experiment multiplied by the number of experiments you run. In the early days, you’re potentially a team of one, and there are limits on the number of things you can test. Once you put a bit more process in place, you can operate on Sean Ellis’ idea of High Tempo Testing. I know I want to test different ad copy, different landing pages, different imagery. All of these are good to do, and they can absolutely result in positive gains. Plus, the upside of this type of testing is that you can do it yourself.

Early on at a startup, a 10% gain often results in only 1 extra lead or 1 extra sale. While it’s clear that these incremental improvements add up over time, they’re most effective on a large base. Early on, you need experiments that can deliver 50%, 100%, 200%, or 500%+ improvements over the status quo, and I’ll bet you that’s not a different landing page image.
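A quick arithmetic sketch makes the point about base size concrete. The lead counts below are hypothetical, chosen only for illustration (they are not Speek data); the function simply multiplies a baseline count by a relative lift.

```python
def extra_conversions(baseline: float, relative_lift: float) -> float:
    """Additional conversions from a relative lift (0.10 = 10%) on a baseline count."""
    return baseline * relative_lift


# Hypothetical monthly lead counts, for illustration only (not Speek data).
baselines = {"early-stage startup": 10, "larger company": 10_000}
lifts = (0.10, 1.00, 5.00)  # 10%, 100%, 500%

for label, base in baselines.items():
    for lift in lifts:
        gain = extra_conversions(base, lift)
        print(f"{label}: {lift:.0%} lift on {base:,} leads -> extra leads: {gain:,.0f}")
```

On a base of 10 leads, a 10% lift yields one extra lead while a 500% lift yields 50; the same 10% lift on 10,000 leads yields 1,000. Small bases reward big swings.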

To illustrate these big-picture ideas, I want to walk through 3 huge tests that I ran with Speek. We were not great at maintaining a high testing tempo, but we were good at finding high-impact experiments.

1) Self-serve or Inbound Sales Team.

After a major site redesign, Speek could support self-signup teams (our MVP required a salesperson to set up a team in the system). Once we did that, we needed to decide whether self-signups were best left alone with automated onboarding and a drip campaign, or routed to an inbound market response rep (MRR) and a sales process. For two weeks, we ran half of the leads through our best MRR. The results were overwhelmingly positive for the sales team: in that first month, 90% of the closed seats came from leads that were answered by an MRR. So we quickly went with that side of the test.

It’s worth noting that over time, the self-serve leads did close a few more deals, but the MRR-handled leads still came out well ahead on both sales cycle time and close rate. Obviously the salaries of the MRR and the Account Executive represent a significant cost, but for us, with top-line revenue growth as the key metric, the gains in cycle time and deal size made it a no-brainer.
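The post doesn’t say how leads were divided between the two arms. One common approach, shown here as an assumption rather than how Speek did it, is to hash a stable lead identifier so each lead always lands in the same arm and the split comes out close to 50/50. `assign_arm`, the arm names, and the lead IDs are hypothetical.

```python
import hashlib


def assign_arm(lead_id: str, arms: tuple[str, ...] = ("self_serve", "mrr")) -> str:
    """Deterministically map a lead to one arm by hashing its (hypothetical) ID."""
    digest = hashlib.sha256(lead_id.encode("utf-8")).digest()
    # Interpret the first 8 bytes of the hash as an integer, then bucket it.
    bucket = int.from_bytes(digest[:8], "big") % len(arms)
    return arms[bucket]


if __name__ == "__main__":
    leads = [f"lead-{i}" for i in range(1000)]  # hypothetical lead IDs
    counts: dict[str, int] = {}
    for lead in leads:
        arm = assign_arm(lead)
        counts[arm] = counts.get(arm, 0) + 1
    print(counts)  # expect a roughly even split between the two arms
```

Hashing instead of calling a random number generator keeps the assignment stable if the same lead signs up twice, so nobody gets bounced between the automated drip and the MRR.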

2) Credit Card Before Trial or After.

Now, sometimes your tests won’t work, and that’s fine. Frankly, it’s part of why you need the ones that do work to work really well. After a conversation with Joel Holland (Founder of Videoblocks), I learned that their cash flow went up 10x and revenue went up 4x when they started requiring a credit card up front for their free trials. They’re a B2C product with a self-signup model, unlike ours, but that kind of potential swing in your business is something you can’t pass up the opportunity to experiment with. For Speek, it didn’t work: trials went down roughly 75% for the group asked for a credit card up front, which left the inbound sales rep twiddling his thumbs a bit too much. Even with a slightly higher conversion rate, it couldn’t make up for the drop at the top of the funnel. Lesson learned.
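The credit-card result comes down to simple funnel arithmetic: paid customers equal trials multiplied by the trial-to-paid conversion rate, so a 75% drop in trials has to be offset by a 4x higher conversion rate just to break even. A minimal sketch; the 100 trials and 10% baseline conversion rate are hypothetical.

```python
def paid_customers(trials: float, conversion_rate: float) -> float:
    """Paid customers = trial volume x trial-to-paid conversion rate."""
    return trials * conversion_rate


def break_even_multiplier(trial_drop: float) -> float:
    """Factor by which the conversion rate must rise to keep paid customers
    flat when trial volume falls by `trial_drop` (0.75 means a 75% drop)."""
    if not 0 <= trial_drop < 1:
        raise ValueError("trial_drop must be in [0, 1)")
    return 1 / (1 - trial_drop)


# Hypothetical numbers for illustration (not Speek data).
baseline_trials, baseline_rate = 100, 0.10
cc_trials = baseline_trials * (1 - 0.75)  # 75% fewer trials with a card up front
needed_rate = baseline_rate * break_even_multiplier(0.75)

print(break_even_multiplier(0.75))  # 4.0
print(paid_customers(baseline_trials, baseline_rate))
print(paid_customers(cc_trials, needed_rate))
```

A “slightly higher” conversion rate falls far short of 4x, which is why the loss at the top of the funnel dominated.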

3) Pick between outbound sales tactics.

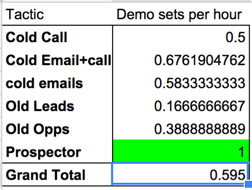

One of the most non-standard experiments we ran was all about testing time efficiency. One of the most valuable resources in the company was the time spent on any particular project or tactic by our lead SDR. It was almost possible to project future revenue based on his productivity from the prior month. So, making his time more efficient was critical. What tactics work best? What lead lists work best? The only way to really know is by running an experiment. Check out these results after 3 weeks:

What each tactic represents isn’t super important (other than the shoutout to the guys at SalesLoft), but look at those results. After 3 weeks, we could see that one tactic in particular, and luckily a scalable one, was almost 50% more effective than any other.
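Judging tactics on time efficiency means normalizing each tactic’s results by the SDR hours it consumed, then comparing the best tactic with the runner-up. A minimal sketch; the tactic names, hours, and the “meetings booked” outcome are hypothetical placeholders, since the post doesn’t list the underlying numbers.

```python
from dataclasses import dataclass


@dataclass
class TacticResult:
    name: str
    hours_spent: float     # SDR hours invested in the tactic
    meetings_booked: int   # hypothetical outcome metric

    @property
    def meetings_per_hour(self) -> float:
        return self.meetings_booked / self.hours_spent


def best_vs_runner_up(results: list[TacticResult]) -> tuple[str, float]:
    """Return the most time-efficient tactic and its relative lift over the next best."""
    if len(results) < 2:
        raise ValueError("need at least two tactics to compare")
    ranked = sorted(results, key=lambda r: r.meetings_per_hour, reverse=True)
    best, runner_up = ranked[0], ranked[1]
    return best.name, best.meetings_per_hour / runner_up.meetings_per_hour - 1


# Hypothetical three-week totals, for illustration only.
results = [
    TacticResult("tactic_a", hours_spent=40, meetings_booked=10),
    TacticResult("tactic_b", hours_spent=40, meetings_booked=8),
    TacticResult("tactic_c", hours_spent=20, meetings_booked=3),
]
name, lift = best_vs_runner_up(results)
print(f"{name} is {lift:.0%} more efficient than the next-best tactic")
```

Dividing by hours rather than comparing raw totals keeps a tactic that simply soaked up more of the SDR’s week from looking better than it is.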

When you’re choosing experiments early on at a startup, with limited time, limited resources, and too much to do, make sure you focus your efforts on experiments with the potential for a big win.